Pathology’s transition from glass slides to digital images has opened a new frontier, enabling the application of artificial intelligence (AI) to improve everything from workflow efficiency to patient outcomes. However, developing AI systems that achieve these goals requires more than high-quality digital images. Accurate diagnostic AI also needs accurate ‘ground truth’ diagnoses from which to learn. Unfortunately, in many diagnostic domains, two pathologists can provide differing diagnoses for the same case, and the same pathologist may even provide a different diagnosis when reviewing that case months later.

Disagreement among medical professionals about a diagnosis is called diagnostic discordance. It is why obtaining a second opinion can be important for some medical diagnoses. Diagnostic discordance is a common challenge in dermatopathology, particularly when it comes to identifying the deadliest form of skin cancer: melanoma. On the surface, melanoma tumors can appear quite similar to perfectly benign moles. These borderline cases matter because accurately determining which melanocytic lesions are cancerous is crucial for ensuring that patients receive proper and timely treatment.

What’s more, over their many years of training, every pathologist develops their own individual criteria for deciding which borderline cases are benign and require observation, and which need immediate treatment for cancer. As a result, some cases are likely to be borderline for many pathologists, while others are likely to be borderline only for a specific pathologist.

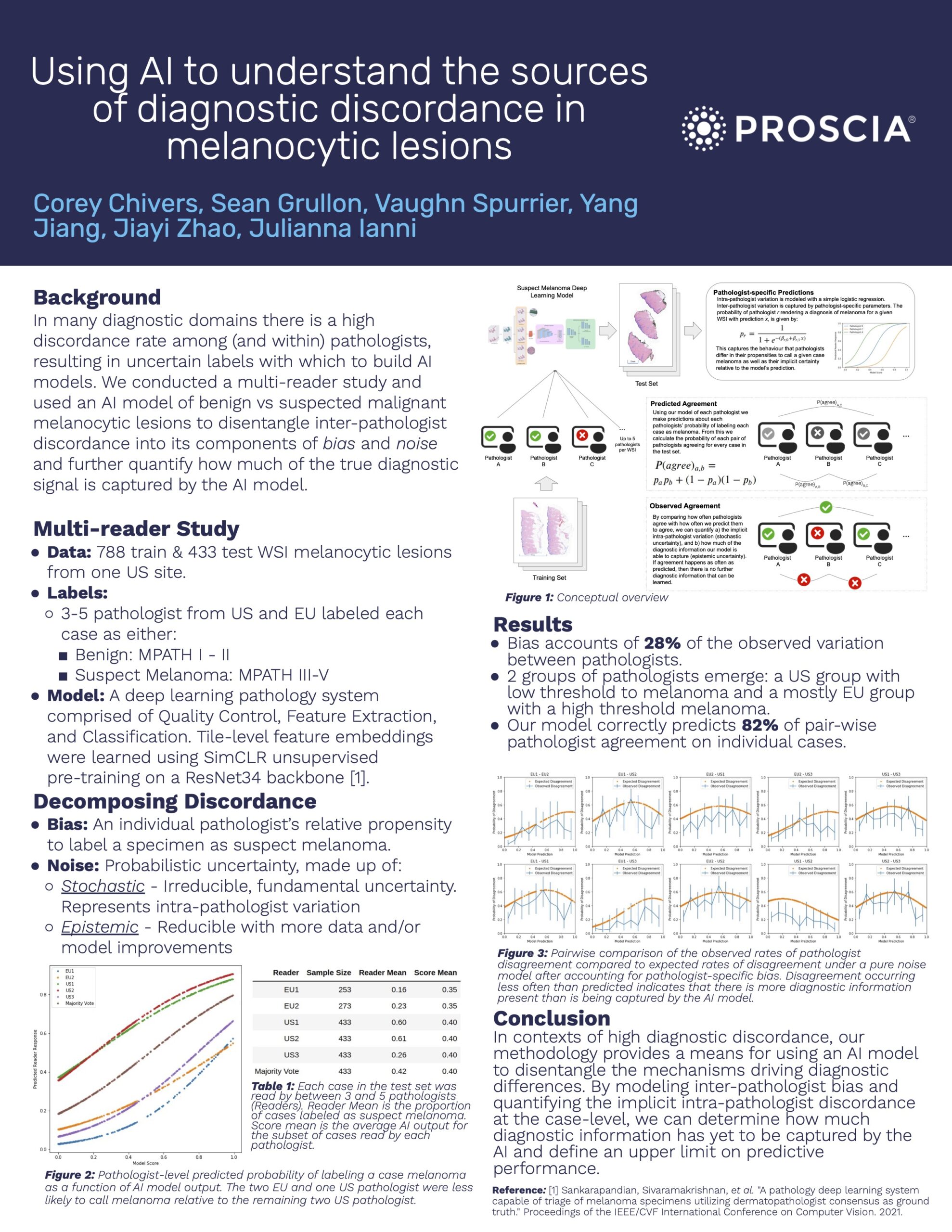

At The 9th Digital Pathology & AI Congress: Europe, Proscia’s AI R&D team presented work that predicts how individual pathologists respond to borderline cases, demonstrating the potential of AI to identify the cases with the highest diagnostic uncertainty. We conducted a multi-reader study and used an AI model that distinguishes benign from suspected malignant melanocytic lesions to separate cases that are borderline for all pathologists from those that are likely to be closest to the border for a specific diagnosing pathologist. This enables the model to flag the cases that are likely to be the most difficult to diagnose, even after accounting for pathologist-level differences in implicit diagnostic criteria.
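To make the distinction above concrete, here is a minimal Python sketch of one way it could be expressed. It is not the method from our poster: it assumes that a model emits a single malignancy score between 0 and 1 per case and that each pathologist can be summarized by a personal decision threshold on that score. The `Reader` class, the threshold values, and the `margin` parameter are all hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Reader:
    """Hypothetical pathologist summarized by a personal threshold in [0, 1]."""
    name: str
    threshold: float


def distance_to_border(score: float, reader: Reader) -> float:
    """How far a case's model score sits from this reader's decision border."""
    return abs(score - reader.threshold)


def borderline_readers(score: float, readers: list[Reader], margin: float = 0.1) -> list[str]:
    """Names of readers whose threshold lies within `margin` of the score."""
    return [r.name for r in readers if distance_to_border(score, r) <= margin]


def classify_case(score: float, readers: list[Reader], margin: float = 0.1) -> str:
    """Label a case as borderline for everyone, for some readers, or for no one."""
    near = borderline_readers(score, readers, margin)
    if len(near) == len(readers):
        return "borderline for all readers"
    if near:
        return "borderline for: " + ", ".join(near)
    return "not borderline"


# Illustrative thresholds only; real values would have to be learned from reader data.
readers = [Reader("A", 0.45), Reader("B", 0.55), Reader("C", 0.70)]
print(classify_case(0.50, readers))
print(classify_case(0.72, readers))
```

Under these assumptions, a score of 0.50 sits close to the borders of readers A and B but not C, while a score of 0.72 is close only to reader C's border.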

When run on a held-out test set, our AI system correctly predicted 82% of the agreement between pathologists, indicating that we are able to accurately model the majority of diagnostic uncertainty in the test cases. This finding highlights the promise of AI to help high-volume pathology practices route the most diagnostically challenging cases to the relevant specialist, automatically flag cases for additional review, and know when to order special staining and other testing to provide a more complete picture prior to pathologist review.
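As a rough illustration of what scoring agreement predictions could look like, the Python sketch below counts how often a predicted agree/disagree outcome matches the observed one across pairs of readers. This is only one possible reading of such a metric, and it is an assumption rather than the exact definition behind the 82% figure. The per-reader thresholds, the data layout, and all function names are hypothetical.

```python
from itertools import combinations


def predicted_call(score: float, threshold: float) -> str:
    """Hypothetical rule: a reader calls a case malignant at or above their threshold."""
    return "suspected malignant" if score >= threshold else "benign"


def predict_agreement(score: float, threshold_a: float, threshold_b: float) -> bool:
    """Predict whether two readers would make the same call on a case."""
    return predicted_call(score, threshold_a) == predicted_call(score, threshold_b)


def agreement_accuracy(scores: dict, thresholds: dict, observed: dict) -> float:
    """Fraction of reader pairs whose agree/disagree outcome was predicted correctly.

    scores:     case id -> model score in [0, 1]
    thresholds: reader name -> assumed personal threshold
    observed:   case id -> {reader name: actual call}
    """
    correct = 0
    total = 0
    for case_id, calls in observed.items():
        for a, b in combinations(sorted(calls), 2):
            actual = calls[a] == calls[b]
            predicted = predict_agreement(scores[case_id], thresholds[a], thresholds[b])
            correct += int(actual == predicted)
            total += 1
    return correct / total if total else float("nan")


# Toy data for illustration only; these are not study results.
scores = {"case-1": 0.5, "case-2": 0.9}
thresholds = {"A": 0.4, "B": 0.6}
observed = {
    "case-1": {"A": "suspected malignant", "B": "benign"},
    "case-2": {"A": "suspected malignant", "B": "benign"},
}
print(agreement_accuracy(scores, thresholds, observed))
```

In this toy data the disagreement on case-1 is predicted correctly and the disagreement on case-2 is missed, so half of the pairwise outcomes are predicted correctly.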

See the full poster from our presentation below.